Caching is the storage and reuse of frequently accessed web resources. Because copies are kept in a location that can be reached quickly, pages load faster, which improves the performance of websites and applications. That's why HTTP caching is an essential tool for businesses that want to optimize the user experience and, consequently, increase their revenue.

Every Millisecond Counts

If you were asked what annoys you most about a website, you'd probably say slowness. And you're not alone: 70% of consumers say the speed at which a web page displays affects their willingness to make an online purchase. Slow delivery and rendering hurt the user experience, which means lost opportunities for customer engagement and sales.

We live in an age of immediacy, and online, anything slow is synonymous with failure. Google and other search engines penalize slow websites when ranking search results, and users are even less forgiving when they have to wait to view and navigate your website.

In times of high demand, an effective way to make a website faster is the use of caching. Have you ever heard of it? In this blog post, we explain what caching is and how it works.

What Is Caching?

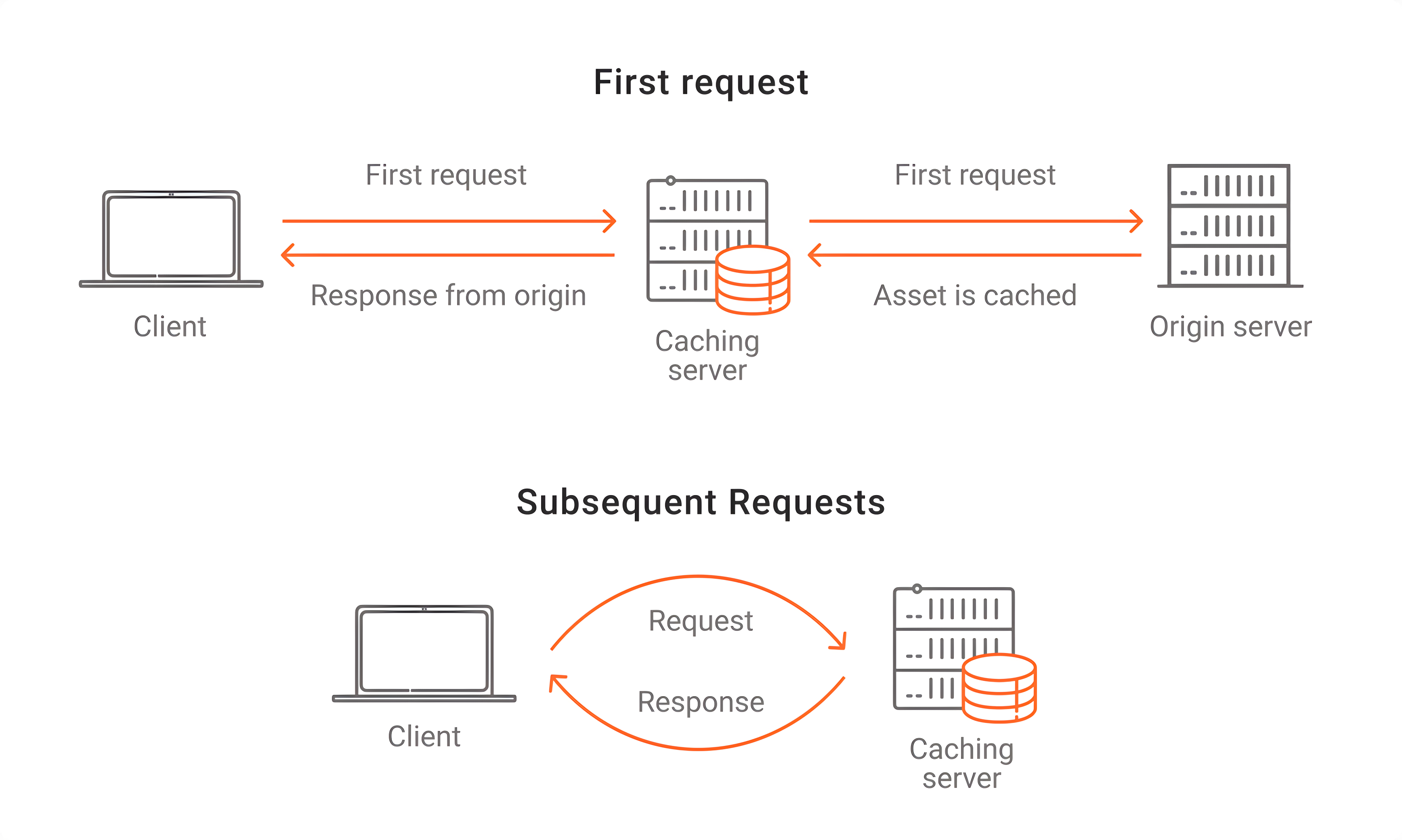

We've seen that caching is the storage and reuse of a website's frequently accessed resources (images, HTML, graphics, URLs and scripts), which prevents them from being downloaded every time the page is visited. To make caching happen, we use a cache: a repository of resources that sits closer to the user than the origin server. For a web page, the cache can be in the browser, a proxy server, edge nodes, and so on. When someone accesses a website, the system first checks whether the cache holds copies of the requested resources. If it does, it retrieves them much faster because it doesn't need to fetch them from the original source. If no copy exists in the cache, the resources are fetched from the origin server and stored in the cache for subsequent requests.

Caching is controlled by information carried in the request and response headers. Because the cache is checked for stored copies first, caching reduces the number of round-trips to the origin server. Fewer round-trips mean faster responses and less network traffic. It's a ripple effect, but a good one: together, these gains lower latency and reduce server costs.

Caching is built into the HTTP protocol, so let's see what that means specifically.

What Is HTTP Caching?

To better understand HTTP caching, let's first recall what HTTP is. In short, HTTP is an application-layer transfer protocol (text-based in HTTP/1.x) and is considered the foundation of data communication on the Web, that is, between clients and servers. During HTTP communication, directives can also specify how the exchanged information should be stored, and this is what we call HTTP caching. These caching directives travel in the request and response headers and define the behavior desired by the client or server. The main purpose of caching is to improve communication performance by reusing a previous response message to satisfy a current request.

How Does HTTP Caching Work?

In its most basic form, the caching process works as follows:

- A web page requests a resource from the origin server.

- The system checks the cache to see if there is already a stored copy of the resource.

- If the resource is cached, the result is a cache hit and the resource is delivered from the cache.

- If the resource isn't cached, the result is a cache miss: the file is fetched from its original source and usually stored in the cache.

- Once the resource is cached, it continues to be served from there until it expires or the cache is cleared.
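To make these steps concrete, here is a minimal sketch of an in-memory cache with a fixed time-to-live. The class `SimpleCache`, the `fetch_from_origin` callback and the 60-second TTL are hypothetical illustrations, not part of any real product. A real HTTP cache derives expiry from response headers and usually revalidates rather than refetching, but the hit/miss/expire flow is the same.

```python
import time
from typing import Callable, Dict, Tuple


class SimpleCache:
    """Minimal in-memory cache: every entry lives for a fixed time-to-live (TTL)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so the example can simulate time passing
        self._store: Dict[str, Tuple[bytes, float]] = {}  # url -> (body, expires_at)

    def get(self, url: str, fetch_from_origin: Callable[[str], bytes]) -> Tuple[bytes, str]:
        now = self.clock()
        entry = self._store.get(url)
        if entry is not None and now < entry[1]:
            return entry[0], "HIT"  # stored copy is still valid: serve it from the cache
        # Miss (never stored) or expired copy: go back to the origin and store the result.
        body = fetch_from_origin(url)
        self._store[url] = (body, now + self.ttl)
        return body, "MISS"

    def clear(self) -> None:
        self._store.clear()  # after clearing, every request is a miss again


# Usage with a fake clock and a hypothetical origin that records each call.
origin_calls = []


def fake_origin(url: str) -> bytes:
    origin_calls.append(url)
    return b"<html>home</html>"


fake_time = [0.0]
cache = SimpleCache(ttl_seconds=60, clock=lambda: fake_time[0])
print(cache.get("/", fake_origin)[1])  # MISS
print(cache.get("/", fake_origin)[1])  # HIT
fake_time[0] = 61.0
print(cache.get("/", fake_origin)[1])  # MISS (the stored copy expired)
```

Injecting the clock keeps the example deterministic. Only the first request and the request after expiry reach the origin.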

Types of Caching

The type of cache is defined according to where the content is stored.

- Browser cache - this storage is done in the browser. Every browser has a local cache, which it uses to reuse previously accessed resources. This type of cache is private because the stored resources are not shared with other users.

- Proxy cache - this storage, also called intermediate caching, happens on a proxy server between the client and the origin server. It's a shared cache because it serves multiple clients, and it's usually maintained by internet or network providers.

- Gateway cache - also called a reverse proxy cache, this is a separate, independent layer between the client and the application. It receives client requests and forwards them to the application, and it stores the application's responses on their way back to the client. If a resource is requested again, the cache returns the stored response without the request reaching the application. It's also a shared cache, but it's deployed on the server side by the site operator rather than on behalf of users.

- Application cache - this storage is done in the application. It allows the developer to specify which files the browser should cache and make them available to users even when they are offline. The original HTML5 Application Cache has since been deprecated and removed from modern browsers in favor of Service Workers.

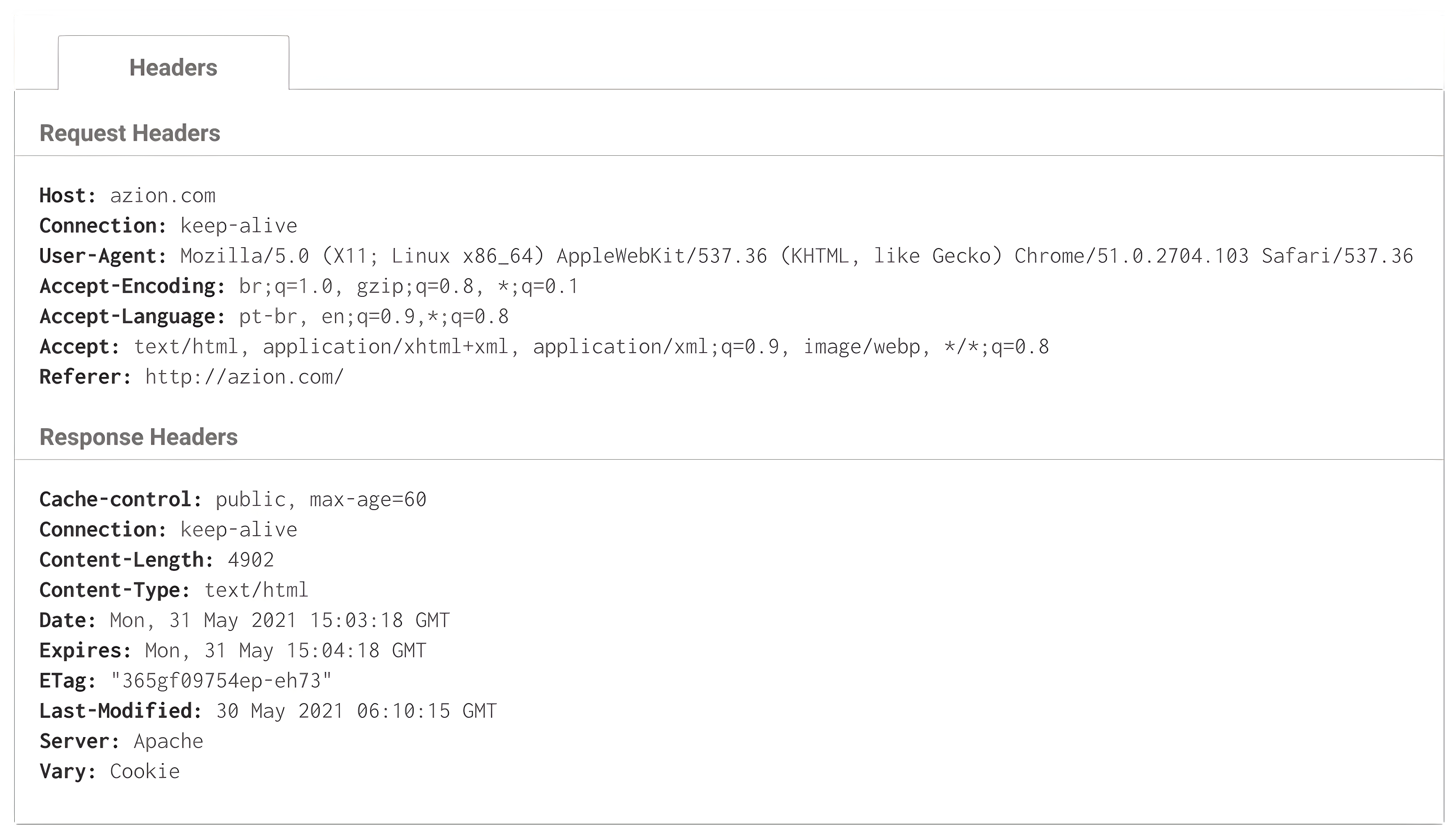

Caching Headers

In the request and response headers, directives are given to define caching characteristics. For example:

Cache-Control

In the Cache-Control header, the following standard directives can be given for caching:

- private

The response is intended for a single user. It can be stored by that user's browser, but not by shared, intermediate caches.

- public

The content can be shared by more than one user, so it can be stored by the browser or by any other cache between the client and the server, including shared caches.

- no-store

The response must not be stored by any cache, so every request goes to the origin server. Use this directive when transmitting confidential data.

- no-cache

A cache may store the content, but it must revalidate it with the origin server before every reuse, as if it were already stale. In practice, the cache sends a validation request to the origin server before releasing the stored copy.

- max-age

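Defines the maximum amount of time, in seconds, that a response is considered fresh and can be reused without being revalidated on the origin server. One year (31,536,000 seconds) is the commonly used maximum for long-lived assets, although the specification itself doesn't impose that limit.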

- s-maxage

Works like max-age, but applies only to shared (intermediate) caches such as proxies and CDNs, where it overrides max-age. The browser ignores it.

- max-stale

A request directive indicating that the client will accept a response that has exceeded its freshness lifetime. If max-stale has no value (in seconds), a stale response of any age is accepted; if a value is given, a response is accepted only if it has been stale for no longer than that many seconds.

- must-revalidate

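Indicates that the expiration time, set by max-age, s-maxage or Expires, must be strictly obeyed. Once the content becomes stale, the cache must not deliver it to the client without first revalidating it with the origin server. If the origin server can't be reached, the cache must return an error (typically 504 Gateway Timeout) instead of serving stale content.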

- proxy-revalidate

This directive has the same meaning as must-revalidate, but applies only to shared caches such as proxies and CDNs. These caching services must revalidate the content once it becomes stale.

- no-transform

Defines that caches and other intermediaries must not modify the content they receive. For example, a proxy must not send a recompressed or otherwise smaller version of content that it received uncompressed from the origin server.

- min-fresh

A request directive indicating that the client wants a response that will still be fresh for at least the specified number of seconds.

- only-if-cached

Indicates that the client wants only a stored response and doesn't want the cache to contact the origin server. If the cache holds a copy that meets the request's constraints, it must use it; otherwise it responds with a 504 (Gateway Timeout) status code.
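The directives above answer two different questions: may a cache store a response at all, and may a stored copy be reused for a given request? The sketch below models both, assuming directives have already been parsed into a dictionary. The function names `may_store` and `can_reuse` are hypothetical, and the logic is deliberately simplified (it ignores proxy-revalidate, only-if-cached and heuristic freshness).

```python
from typing import Dict, Optional

# Parsed Cache-Control directives, e.g. {"max-age": "60", "private": None}.
Directives = Dict[str, Optional[str]]


def may_store(response_cc: Directives, shared_cache: bool) -> bool:
    """May this cache keep a copy of the response at all?"""
    if "no-store" in response_cc:
        return False  # no cache may store it
    if shared_cache and "private" in response_cc:
        return False  # only the user's own (private) cache may store it
    return True


def can_reuse(age: int, freshness_lifetime: int,
              request_cc: Directives, response_cc: Directives) -> bool:
    """May a stored response that is `age` seconds old be reused for this request?"""
    if "no-cache" in request_cc or "no-cache" in response_cc:
        return False  # revalidation with the origin is required first
    remaining = freshness_lifetime - age  # seconds of freshness left; <= 0 means stale
    min_fresh = request_cc.get("min-fresh")
    if min_fresh is not None and remaining < int(min_fresh):
        return False  # would not stay fresh long enough for this client
    if remaining > 0:
        return True  # still fresh
    if "must-revalidate" in response_cc:
        return False  # stale + must-revalidate: never serve without revalidating
    if "max-stale" in request_cc:
        limit = request_cc["max-stale"]
        # -remaining is how many seconds the response has been stale
        return limit is None or -remaining <= int(limit)
    return False


print(can_reuse(70, 60, {"max-stale": "30"}, {}))  # True: 10 s stale is within the 30 s allowance
```

Note how must-revalidate on the response overrides the client's max-stale, matching the description of that directive.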

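It's possible to combine different cache behaviors in one header, but some directives shouldn't be used together. The directives private and public are opposites, so choose only one. no-cache and no-store can technically appear together, but no-store is the stricter of the two (nothing is stored at all), which makes no-cache redundant.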
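A Cache-Control value is a comma-separated list of directives, some with an argument. The sketch below parses such a value and flags the combinations discussed above. `parse_cache_control` and `check_combinations` are hypothetical helpers, and the parser is simplified: it does not handle quoted arguments that contain commas.

```python
from typing import Dict, List, Optional


def parse_cache_control(value: str) -> Dict[str, Optional[str]]:
    """Turn 'public, max-age=3600' into {'public': None, 'max-age': '3600'}.

    Simplified: quoted arguments containing commas, such as
    private="Set-Cookie, Authorization", are not handled.
    """
    directives: Dict[str, Optional[str]] = {}
    for part in value.split(","):
        part = part.strip()
        if not part:
            continue
        name, sep, arg = part.partition("=")
        name = name.strip().lower()  # directive names are case-insensitive
        directives[name] = arg.strip().strip('"') if sep else None
    return directives


def check_combinations(directives: Dict[str, Optional[str]]) -> List[str]:
    """Flag directive combinations that shouldn't be sent together."""
    problems = []
    if "public" in directives and "private" in directives:
        problems.append("public and private are mutually exclusive")
    if "no-store" in directives and "no-cache" in directives:
        problems.append("no-store and no-cache both present; no-store is the stricter one")
    return problems


print(parse_cache_control("Public, max-age=3600"))
# {'public': None, 'max-age': '3600'}
print(check_combinations(parse_cache_control("public, private, max-age=60")))
# ['public and private are mutually exclusive']
```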

Expires

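The Expires header gives an absolute date and time after which the content is considered stale, so later requests must fetch or revalidate the latest content from the origin server. If a response also carries max-age (or s-maxage, for shared caches), that directive takes precedence over Expires.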
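Because several headers can set a lifetime, a cache needs a precedence rule. The sketch below computes a response's freshness lifetime in seconds, assuming directives are already parsed into a dictionary and that the response carries a valid Date header. The function name `freshness_lifetime` is hypothetical; the precedence (s-maxage for shared caches, then max-age, then Expires minus Date) follows the HTTP caching specification.

```python
from email.utils import parsedate_to_datetime
from typing import Dict, Optional


def freshness_lifetime(cc: Dict[str, Optional[str]], headers: Dict[str, str],
                       shared_cache: bool) -> Optional[int]:
    """Seconds a response stays fresh, or None if no explicit lifetime is given."""
    if shared_cache and cc.get("s-maxage") is not None:
        return int(cc["s-maxage"])  # shared caches: s-maxage wins
    if cc.get("max-age") is not None:
        return int(cc["max-age"])  # max-age takes precedence over Expires
    if "Expires" in headers and "Date" in headers:
        try:
            expires = parsedate_to_datetime(headers["Expires"])
            date = parsedate_to_datetime(headers["Date"])
            return max(0, int((expires - date).total_seconds()))
        except (TypeError, ValueError):
            # Simplification: an unparseable date (e.g. "Expires: 0") is
            # treated as "already expired".
            return 0
    return None


h = {"Date": "Mon, 01 Jan 2024 00:00:00 GMT", "Expires": "Mon, 01 Jan 2024 01:00:00 GMT"}
print(freshness_lifetime({}, h, shared_cache=False))  # 3600
```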

ETag

The ETag (entity tag) header is used to verify whether the resource cached by the browser is the same as the one on the origin server, that is, whether the client has the latest version of the cached content. The server assigns each version of a resource an ETag value, an opaque identifier (often a hash of the content or a version number) that changes every time the resource changes. When the cached copy needs revalidation, the browser sends the stored value back in an If-None-Match request header; if it still matches, the server replies with 304 Not Modified and the cached copy is reused.
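On the server side, ETag revalidation boils down to comparing the tag the browser sends with the current one. The sketch below assumes a hypothetical tagging scheme (a truncated SHA-256 hash of the body); servers are free to use any opaque string. The functions `make_etag` and `respond` are illustrative names, not a real framework's API.

```python
import hashlib
from typing import Dict, Tuple


def make_etag(body: bytes) -> str:
    # Hypothetical scheme: a strong ETag built from a hash of the content,
    # so it changes whenever the content changes.
    return '"' + hashlib.sha256(body).hexdigest()[:16] + '"'


def _strip_weak(tag: str) -> str:
    # If-None-Match uses weak comparison, so a W/ prefix is ignored.
    return tag[2:] if tag.startswith("W/") else tag


def respond(body: bytes, request_headers: Dict[str, str]) -> Tuple[int, Dict[str, str], bytes]:
    """Return (status, headers, body), answering 304 when the client's copy is current."""
    etag = make_etag(body)
    if_none_match = request_headers.get("If-None-Match")
    if if_none_match is not None:
        candidates = [_strip_weak(t.strip()) for t in if_none_match.split(",")]
        if "*" in candidates or etag in candidates:
            return 304, {"ETag": etag}, b""  # no body: the cached copy is reused
    return 200, {"ETag": etag}, body


status, headers, _ = respond(b"hello", {})
print(status)                                                   # 200
print(respond(b"hello", {"If-None-Match": headers["ETag"]})[0])  # 304
```

A 304 response carries no body, which is where the bandwidth saving comes from.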

Last-Modified

The Last-Modified header tells the browser when the resource was last modified, which helps determine whether to use the cached copy or download the latest version. For example, when a user visits your website, the browser stores the page's resources together with their Last-Modified dates. On the next visit, it sends that date back in an If-Modified-Since request header, and the server checks whether the file has changed since then. If there are no modifications, the server sends a “304 Not Modified” response and the browser uses the cached copy.
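Date-based revalidation works similarly to ETags, but compares timestamps. The sketch below uses Python's `email.utils` helpers, which format and parse the RFC 1123-style dates HTTP uses. `respond_with_last_modified` is a hypothetical name, and the function assumes the caller passes a timezone-aware `last_modified`.

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime
from typing import Dict, Tuple


def respond_with_last_modified(body: bytes, last_modified: datetime,
                               request_headers: Dict[str, str]) -> Tuple[int, Dict[str, str], bytes]:
    """Answer 304 if the resource hasn't changed since If-Modified-Since.

    Assumes `last_modified` is timezone-aware.
    """
    # HTTP dates have one-second resolution, so drop sub-second precision.
    last_modified = last_modified.astimezone(timezone.utc).replace(microsecond=0)
    headers = {"Last-Modified": format_datetime(last_modified, usegmt=True)}
    ims = request_headers.get("If-Modified-Since")
    if ims is not None:
        try:
            since = parsedate_to_datetime(ims)
        except (TypeError, ValueError):
            since = None  # an unparseable date is ignored
        if since is not None:
            if since.tzinfo is None:
                since = since.replace(tzinfo=timezone.utc)  # treat naive dates as UTC
            if last_modified <= since:
                return 304, headers, b""  # unchanged since the browser's copy
    return 200, headers, body


lm = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
status, headers, _ = respond_with_last_modified(b"x", lm, {})
print(headers["Last-Modified"])  # Mon, 01 Jan 2024 12:00:00 GMT
```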

Vary

The Vary header makes it possible to store different versions of the same content. It tells caches which request headers, besides the URL, to check before deciding which stored version matches a request. For example, when used with the Accept-Encoding header, it lets caches differentiate compressed and uncompressed content, and when used with the User-Agent header, it differentiates the mobile and desktop versions of a website.
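One way to picture Vary is as an extension of the cache key: the URL plus the values of every request header the response varies on. The sketch below builds such a key. `cache_key` is a hypothetical helper, and it compares raw header values; production caches usually normalize them (for example, treating "gzip, br" and "br, gzip" as equivalent).

```python
from typing import Dict, Optional, Tuple


def cache_key(url: str, vary: str, request_headers: Dict[str, str]) -> Optional[Tuple]:
    """Build a key so that each variant named by Vary gets its own cache entry."""
    names = sorted({n.strip().lower() for n in vary.split(",") if n.strip()})
    if "*" in names:
        return None  # "Vary: *" means a stored response can never be matched
    # Header names are case-insensitive, so compare them in lowercase.
    lowered = {k.lower(): v for k, v in request_headers.items()}
    return (url,) + tuple((n, lowered.get(n, "")) for n in names)


store = {}
store[cache_key("/app.js", "Accept-Encoding", {"Accept-Encoding": "gzip"})] = b"<gzip bytes>"
store[cache_key("/app.js", "Accept-Encoding", {"Accept-Encoding": "identity"})] = b"<plain bytes>"
print(len(store))  # 2: one entry per encoding
```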

Benefits of Caching

Summing up, we have listed the main benefits of caching below:

- latency reduction;

- bandwidth consumption reduction;

- network traffic reduction;

- website speed and performance increase.

Azion’s Solution

Users have very high expectations regarding website speed, and a response that doesn't meet them is synonymous with losing customers. Deloitte's 2020 report Milliseconds Make Millions illustrates this: it shows that making a site just 0.1s faster brings various benefits to the business, such as increased user engagement, better conversion rates and, consequently, sales growth.

As we’ve shown in this post, HTTP caching is extremely relevant in optimizing the speed of a website and vital to improving the perception of a company’s brand, especially if you want to increase your customer base or keep them loyal to your brand. And how can you achieve this? It’s simple: with Edge Cache.

Understanding Edge Caching and Its Benefits

Edge caching improves website performance by storing content closer to end users, reducing latency and enhancing load times. This approach minimizes the need for repeated requests to the origin server, allowing for faster content delivery and improved scalability, even during high traffic periods.

A caching system at the network edge supports thousands of simultaneous requests without overloading infrastructure. It can efficiently serve various HTTP and HTTPS-based content types, including static files and live or on-demand video streams. Even if the origin server experiences downtime, cached content remains accessible, ensuring greater availability and reliability.

Edge caching typically operates using a reverse proxy architecture, where client requests are routed through a globally distributed network of edge nodes. This setup allows content to be cached closer to users, reducing the distance data must travel. Additionally, advanced caching strategies, such as tiered caching, can introduce an extra caching layer between the edge and the origin, further reducing server load and optimizing resource use.
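The benefit of tiered caching can be seen in a toy model: several edge caches share one mid-tier cache, so a resource requested at many edges is fetched from the origin only once. The class `TieredCache` and the edge names are hypothetical, and the model leaves out expiry and eviction to keep the layering visible. It is not a description of any specific product's internals.

```python
from typing import Callable, Dict, List


class TieredCache:
    """Toy model: several edge caches in front of one shared tier cache in front of the origin."""

    def __init__(self, edges: List[str], fetch_origin: Callable[[str], bytes]):
        self.edge_stores: Dict[str, Dict[str, bytes]] = {name: {} for name in edges}
        self.tier_store: Dict[str, bytes] = {}
        self.fetch_origin = fetch_origin
        self.origin_requests = 0

    def get(self, edge: str, url: str) -> bytes:
        store = self.edge_stores[edge]
        if url in store:
            return store[url]  # edge hit: closest to the user
        if url not in self.tier_store:  # tier miss: only now go to the origin
            self.origin_requests += 1
            self.tier_store[url] = self.fetch_origin(url)
        store[url] = self.tier_store[url]  # fill the edge on the way back
        return store[url]


tc = TieredCache(["sao-paulo", "lisbon", "tokyo"], lambda url: b"video-chunk")
for edge in ["sao-paulo", "lisbon", "tokyo", "tokyo"]:
    tc.get(edge, "/v/1.ts")
print(tc.origin_requests)  # 1: without the tier, each edge's first miss would hit the origin
```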

By implementing edge caching, websites can achieve lower latency, higher transfer speeds, and a more responsive experience for users across different locations and network conditions.

So, if you want to guarantee the highest speed for your website and the best online experience for your users, Edge Cache is the solution for a super fast, high-performance website.